
Whether it’s Netflix or Spotify, behind every widely used media streaming service there is an AI system that keeps users engaged through personalized recommendations. The strategy most recommendation systems follow is to suggest content that is either similar to what the targeted user has previously consumed or that has been consumed by similar users.

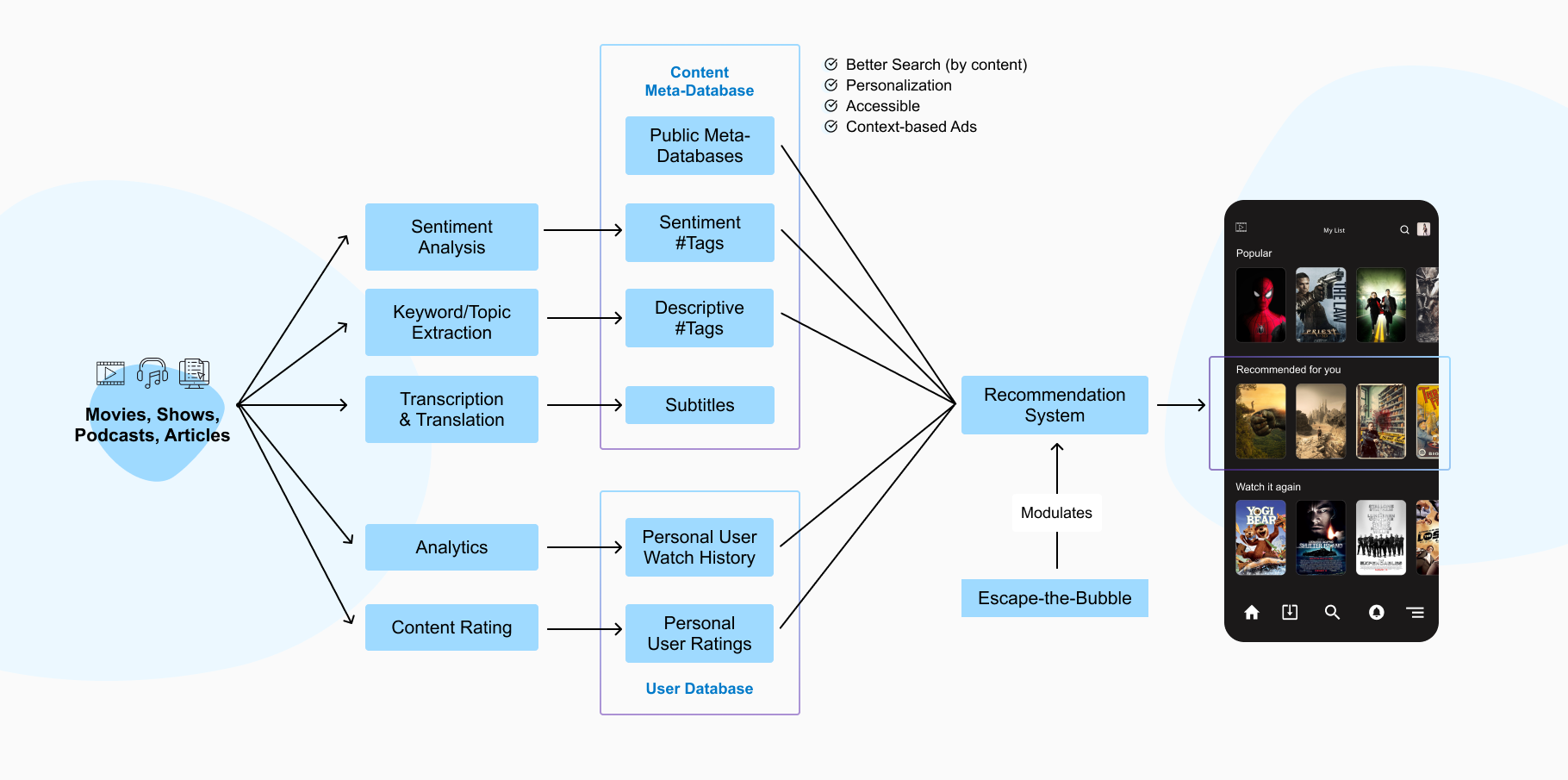
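To make the two strategies concrete, here is a minimal sketch in Python. It is not how Netflix or Spotify actually work; the user names, item IDs, tags, and the choice of Jaccard overlap as the similarity measure are all assumptions made purely for illustration.

```python
# Hypothetical consumption history: user -> set of item ids (illustrative data).
HISTORY = {
    "ana": {"doc_physics", "doc_space", "pod_hiking"},
    "ben": {"doc_physics", "doc_space", "doc_oceans"},
    "cleo": {"pod_hiking", "pod_climbing"},
}

# Hypothetical content tags per item, used by the content-based strategy.
TAGS = {
    "doc_physics": {"science", "history"},
    "doc_space": {"science", "space"},
    "doc_oceans": {"science", "nature"},
    "pod_hiking": {"outdoor", "sports"},
    "pod_climbing": {"outdoor", "sports"},
}


def jaccard(a, b):
    """Overlap of two sets: |intersection| / |union| (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend_by_similar_users(user, history):
    """Rank unseen items by the summed similarity of the users who consumed them."""
    seen = history[user]
    scores = {}
    for other, items in history.items():
        if other == user:
            continue
        similarity = jaccard(seen, items)
        for item in items - seen:
            scores[item] = scores.get(item, 0.0) + similarity
    # Highest score first; ties are broken alphabetically for stable output.
    return sorted(scores, key=lambda item: (-scores[item], item))


def recommend_by_similar_content(user, history, tags):
    """Rank unseen items by their best tag overlap with anything the user consumed."""
    seen = history[user]
    scores = {}
    for item, item_tags in tags.items():
        if item in seen:
            continue
        scores[item] = max(
            (jaccard(item_tags, tags[s]) for s in seen), default=0.0
        )
    return sorted(scores, key=lambda item: (-scores[item], item))


print(recommend_by_similar_users("ana", HISTORY))
print(recommend_by_similar_content("ana", HISTORY, TAGS))
```

Note how the two strategies can rank the same candidates differently: one follows what like-minded users did, the other follows what the content itself is about.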

However, the power of AI can be leveraged in a whole host of other ways when it comes to media and telecommunications:

Auto-Tagging

By automatically analyzing the content of articles, images, videos, and podcasts, AI can extract themes, topics, key phrases, and even sentiments. Using these as tags on the respective media files builds up rich metadata that enables sophisticated semantic search and discovery of media based on its actual content.

For example, one might search for “Einstein” and get results including a video documentary about the greatest physicists in history, an article on how the theory of relativity enables GPS, and a podcast talking about the fathers of the atom bomb. What is crucial is that no editor has to watch, read, or listen to the content and manually add the "Einstein" tag to its metadata. Instead, AI automatically finds mentions of and references to Einstein within these pieces of media. And while human editors could have done that in principle, they certainly couldn’t have done it as quickly, as cheaply, and as extensively as AI can. Now they can use this powerful AI-driven media search engine to find and select the content they are looking for and focus on the more creative process of curating and producing content.

Additionally, tags are not only generated automatically but also localized within the piece of media, such as a timestamp in a video or a specific position within the text of an article. Being able to localize tags opens up new opportunities like context-based advertisement. For example, in a podcast that talks about outdoor sports, an ad for hiking shoes could be played right after a section about hiking. Again, this is not a new concept, but what is game-changing is that the selection of which ad to match with which piece of media and at which point in time is done fully automatically and at a high scale and resolution.
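The hiking-shoes example can be sketched in a few lines of Python. This assumes an upstream tagging model has already produced localized tags with start and end times; the `LocalizedTag` and `Ad` types, the keyword sets, and the timestamps are hypothetical and only illustrate the matching step.

```python
from dataclasses import dataclass


@dataclass
class LocalizedTag:
    """A tag plus the section of the media file it refers to (in seconds)."""
    tag: str
    start_s: float
    end_s: float


@dataclass
class Ad:
    """An ad and the tags it should be matched against (hypothetical model)."""
    name: str
    keywords: frozenset


def plan_ad_slots(tags, ads):
    """Return (timestamp, ad name) pairs.

    For each tagged section, the first ad whose keywords contain the tag is
    scheduled right after that section ends. Sections without a matching ad
    get no slot.
    """
    slots = []
    for section in sorted(tags, key=lambda t: t.end_s):
        for ad in ads:
            if section.tag in ad.keywords:
                slots.append((section.end_s, ad.name))
                break
    return slots


# Illustrative podcast about outdoor sports.
podcast_tags = [
    LocalizedTag("hiking", 120.0, 300.0),
    LocalizedTag("kayaking", 300.0, 480.0),
    LocalizedTag("nutrition", 480.0, 600.0),
]
inventory = [
    Ad("hiking shoes", frozenset({"hiking", "trekking"})),
    Ad("dry bags", frozenset({"kayaking"})),
]

for timestamp, ad_name in plan_ad_slots(podcast_tags, inventory):
    print(f"{timestamp:>6.1f}s  {ad_name}")
```

A production system would likely add constraints such as a minimum gap between ads or a cap per episode; those are left out here to keep the matching logic visible.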

Auto-Transcription

Where there is spoken language there is a transcription use case, be it in films, podcasts, news bulletins, interviews, or talk shows. Any spoken word can be transcribed into written text, either offline by processing the respective media file or live in real time. Automatically generating subtitles is an obvious and valuable use case already implemented in many services like YouTube (offline) or MS Teams (live). If multiple voices are involved, then speaker indexing can identify the speaker alongside the transcription and reveal who said what. Typical use cases are automatic transcription of interviews or automatic minute-taking for online conferencing.
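As a rough illustration of how speaker indexing and transcription come together, the Python sketch below assigns a speaker to each transcribed segment by time overlap. It assumes two upstream components that are not shown here: a speech-to-text step yielding timed text segments and a speaker-detection step yielding timed speaker turns. The data and function names are hypothetical.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length in seconds of the overlap between two time intervals (0.0 if none)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_speakers(segments, turns):
    """Attach a speaker to every transcript segment.

    segments: list of (start_s, end_s, text) from a speech-to-text step.
    turns:    list of (start_s, end_s, speaker) from a speaker-detection step.
    Each segment gets the speaker whose turn overlaps it the longest,
    or "unknown" if no turn overlaps it at all.
    """
    labeled = []
    for start, end, text in segments:
        best = max(
            turns,
            key=lambda turn: overlap(start, end, turn[0], turn[1]),
            default=None,
        )
        if best is None or overlap(start, end, best[0], best[1]) == 0.0:
            speaker = "unknown"
        else:
            speaker = best[2]
        labeled.append((speaker, text))
    return labeled


# Illustrative interview snippet.
segments = [
    (0.0, 4.0, "Welcome to the show."),
    (4.2, 9.0, "Thanks for having me."),
    (9.1, 12.0, "Let's get started."),
]
turns = [
    (0.0, 4.1, "Host"),
    (4.1, 9.05, "Guest"),
    (9.05, 12.5, "Host"),
]

for speaker, text in label_speakers(segments, turns):
    print(f"{speaker}: {text}")
```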

Auto-Translation

Of course, once the language of a piece of media is available in written form – either because it is the original form, e.g. an article, or because it has been transcribed from audio or video – then automatic translation into almost any language becomes feasible. The potential impact is tremendous: upon creation, a separate version of any piece of media can be produced in any desired language and provided automatically. This could transform video-conferencing by allowing participants to speak in their native tongue while each listener receives live translation and subtitles in their own language.

Voice Cloning

Additionally, recent advancements in speech synthesis allow for “cloning” the natural voice of a real person while respecting aspects like intonation, melody, pauses, rhythm, inflection, pitch, pace, accent, emotional charge, and all the other characteristics that make a voice unique.

The potential applications are wide-ranging, including producing advertisements, audiobooks, documentaries, and speeches using the voices of popular actors, celebrities, or narrators – be they alive or deceased. Other use cases could include automatically dubbing movies in any target language with the voices of either voice actors or even the original cast, or live-translating a speaker while preserving their voice, for example in the context of video-conferencing or public speeches.

Another completely different use case can be found in the medical field. Voice Cloning holds the power to assist people who, be it through an accident or illness, are no longer able to speak and have to use speech synthesis apps to communicate. Hearing their own voice come out of such an app, generated with the help of a past recording of their voice, could be life-changing. However, it’s worth noting that, while rich in potential use cases, Voice Cloning clearly bears a certain risk of abuse and must be applied responsibly, ethically, and legally.

Escaping the Bubble

So, what does “personalized” really mean? In simplified terms, it translates to “content that has a high likelihood of being consumed by the user.” This isn't the same as having a high likelihood of being liked by the user. Most users don’t rate the content they have engaged with (hit the like/dislike button), so often the only indicator that users appreciated what they have consumed is the fact that they consumed it. Yet consuming a piece of content does not automatically mean they like it; sometimes it has more to do with habit than with active enjoyment. In fact, some content can produce a negative experience for the user rather than a pleasurable or positive one, but it gets consumed anyway.

But even if users have a healthy relationship with the media they consume based on automatic recommendations, there is another danger lurking: the bubble. The more users consume suggested content, the more the system is encouraged to recommend similar content, and therefore the more uniform the content becomes. These dynamics form a spiral that reduces content diversity, and the imaginary bubble encompassing the user’s experienced media universe shrinks.

However, the same technology that is creating this problem can also remedy it. With AI, content that lies beyond the boundaries of the user’s bubble can be identified and slipped into the set of “in-bubble” recommendations to gently but deliberately nudge the user to explore media that broadens their horizon. With carefully thought-through UX design, the user might even be put in control of how much “out-of-bubble” content they would like to explore and enter some kind of “adventure” mode.
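One way such an “adventure” control could work is sketched below in Python. It assumes the two ranked lists (in-bubble and out-of-bubble) have already been produced by some upstream model; the `adventure` parameter, the function name, and the even-spacing rule are illustrative assumptions, not a description of any existing product.

```python
def blend_recommendations(in_bubble, out_of_bubble, adventure, total):
    """Mix out-of-bubble items into a list of in-bubble recommendations.

    adventure: user-controlled share (0.0 to 1.0) of slots given to
               out-of-bubble content.
    total:     desired number of recommendations.
    Out-of-bubble items are spread between the in-bubble ones rather than
    appended at the end, so they are actually seen. If either list is too
    short, the result simply contains fewer items.
    """
    if not 0.0 <= adventure <= 1.0:
        raise ValueError("adventure must be between 0.0 and 1.0")

    n_out = min(round(total * adventure), len(out_of_bubble))
    n_in = min(total - n_out, len(in_bubble))

    blended = list(in_bubble[:n_in])
    # Number of in-bubble items between two consecutive out-of-bubble items.
    step = max(1, n_in // (n_out + 1))
    for k, item in enumerate(out_of_bubble[:n_out]):
        position = min(len(blended), (k + 1) * step + k)
        blended.insert(position, item)
    return blended


familiar = ["a", "b", "c", "d", "e"]   # hypothetical in-bubble ranking
unfamiliar = ["x", "y", "z"]           # hypothetical out-of-bubble ranking

print(blend_recommendations(familiar, unfamiliar, adventure=0.0, total=5))
print(blend_recommendations(familiar, unfamiliar, adventure=0.4, total=5))
```

With `adventure` set to zero the user gets the familiar list unchanged; raising it gradually replaces familiar slots with unfamiliar ones, which is the kind of dial a UX design could expose.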

At intive, we are both design-led and AI-led. We thoroughly explore, design, implement, and productionize next-level features and use cases with cutting-edge data-driven technologies. We make use of our vast collective experience and the synergies that emerge from engagements across all industries.

We have the insight and the capabilities to combine best-of-breed off-the-shelf services with custom implementations to build reliable and scalable solutions effectively. To deeply understand our clients’ needs and identify those solutions, we offer fast-paced, high-intensity discovery workshops as well as proofs-of-concept, which may or may not transition into fully-fledged projects.

To find out more about what AI can do for you and your customers, get in touch with intive today.